The last two days have certainly been challenging for us and for our clients. As you may know, our system has been sluggish during that time, and at times your website may not have loaded for a few minutes. Here is an explanation of what happened.

Starting on Wednesday, May 26 at about 5:30 am, one of our clients' websites started to experience a DDoS (Distributed Denial of Service) attack. Essentially, this was a massive flood of traffic to a single page on their website. We were notified of it pretty quickly and started to investigate. In a situation like this, where sites are going up and down, it's challenging to pinpoint the issue, but we soon did.

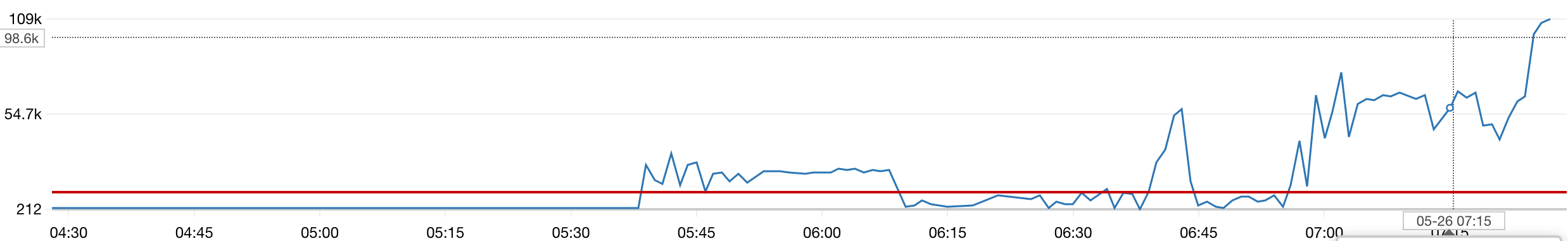

The quick solution was to turn that site off. That helped and got things back up and running early Wednesday morning, but the system was still being flooded with requests. As you can see in the chart above, traffic started to spike a little after 5:30 am EST and really ramped up by 7:00 am. The red line marks 10,000 requests per minute. We're typically around 1,000 to 4,000 requests per minute, depending on the day and hour. The highest mark on the chart is over 100,000 requests per minute, which is at least 25 times what we typically get.
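For the technically curious, here is a minimal sketch of the arithmetic behind those numbers. It only uses the figures quoted above; the names `is_spike` and `times_over` are made up for illustration and are not part of our monitoring system.

```python
# Figures quoted in this post, in requests per minute.
TYPICAL_LOW = 1_000     # quiet end of our normal range
TYPICAL_HIGH = 4_000    # busy end of our normal range
ALERT_LINE = 10_000     # the red line on the chart
PEAK = 100_000          # the chart peaked at "over" this, so it is a lower bound


def is_spike(requests_per_minute: int, threshold: int = ALERT_LINE) -> bool:
    """Return True when traffic is above the alert line (hypothetical helper)."""
    return requests_per_minute > threshold


def times_over(baseline: int, observed: int) -> float:
    """How many times larger the observed traffic is than a baseline."""
    return observed / baseline


print(is_spike(PEAK))                  # True
print(times_over(TYPICAL_HIGH, PEAK))  # 25.0
print(times_over(TYPICAL_LOW, PEAK))   # 100.0
```

In other words, the peak was somewhere between 25 and 100 times our normal traffic, depending on which end of the typical range you compare against.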

Our system is scalable and it tried to scale, but the sudden surge brought things down for a little bit Wednesday morning. We turned off the website that was under attack (this is allowed under our terms of service to protect the rest of our system) and manually scaled up our servers.

To better protect our system we put some rules in place on our edge servers to block requests to the offending site. The configuration worked and sites got more stable.
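As a rough illustration only, a rule that blocks requests by the site they target could look something like the sketch below. This is not our actual edge configuration; the hostname `attacked-site.example` and the function `should_block` are hypothetical placeholders.

```python
# Hypothetical sketch of a host-based block rule, not the real edge configuration.
BLOCKED_HOSTS = {"attacked-site.example"}  # assumed placeholder hostname


def should_block(host_header: str) -> bool:
    """Return True if a request's Host header targets a blocked site."""
    # Normalize the header: trim whitespace, lower-case it,
    # drop any ":port" suffix, and drop a trailing dot.
    host = host_header.strip().lower().split(":")[0].rstrip(".")
    return host in BLOCKED_HOSTS


print(should_block("Attacked-Site.example:443"))  # True
print(should_block("another-site.example"))       # False
```

The idea is that requests for the site under attack are rejected at the edge, before they reach the servers that every other site shares.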

By Wednesday afternoon we decided to try and bring back the site that was under attack because we felt that our blocking configuration was working well. For the most part it was, and other than a short hiccup, the system handled the increased load with that site live again.

However, by about 11:00 pm Wednesday evening, we started to receive occasional brief outage reports from our monitoring systems. By 3:30 am it was worse and we dug into things again. We also had to turn the site that was under attack back off and resume blocking all requests to it.

Unfortunately, today was worse than yesterday. It appeared that the attack had increased and our blocking configurations were not working as well as they needed to. We spent quite a bit of time scaling our system up further, talking with different vendors to try to find a solution, and getting direct support from Amazon Web Services (where our system is hosted).

Then, around 2:30 pm today, a configuration error on our edge servers took everything down for about 40 minutes. Once we figured out the issue, we rebuilt the edge servers, and by 3:10 pm or 3:15 pm the system was back up. By about 3:30 pm the system was as stable as it was before the attack, even with the increased traffic.

Throughout the day we also worked with the customer whose website was under attack to get their DNS moved to Cloudflare so they can take advantage of its DDoS protection services. That happened late this afternoon. We're still monitoring their DNS situation and are giving the DNS changes time to fully propagate. We plan to have their site live again by the morning at the latest.

We learned a lot of lessons over the last two days, and we're going to take some time to reflect on how we can improve our system and its resiliency. We have a call with another vendor tomorrow about a more enterprise-grade solution for our edge servers to increase capacity and protection.

I sincerely thank all of our customers for their patience and understanding. This type of thing is hard to deal with and we've learned a lot.

If you have any questions at all, or need additional information, please don't hesitate to contact our support team.

Sincerely - Eric Tompkins, BranchCMS Founder